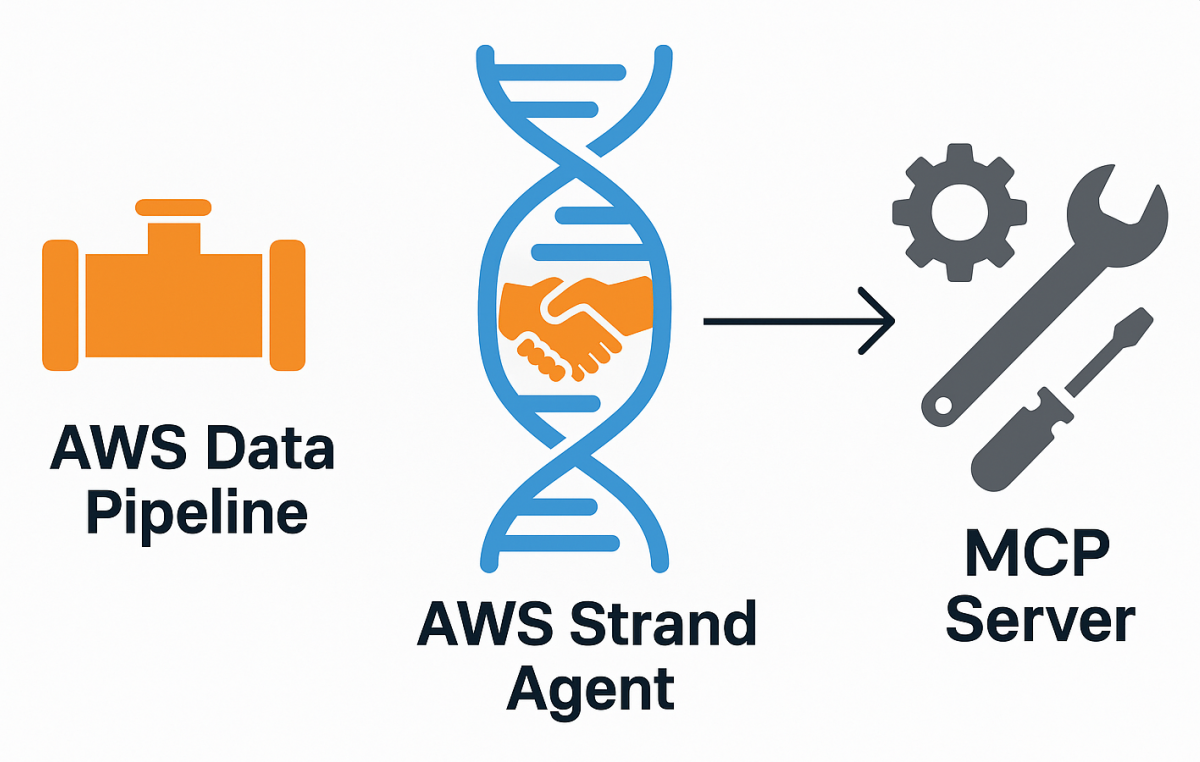

This project demonstrates AWS Strands Agents and how quickly you can build intelligent, context-aware applications with them. AWS provides a robust foundation for creating agents that can understand, reason, and act on complex data, and with Model Context Protocol (MCP) integration you can extend these capabilities with external tools.

Why AWS Strands Agents?

- Model-Driven Orchestration: Strands leverages model reasoning to plan, orchestrate tasks, and reflect on goals.

- Model and Provider Agnostic: Work with any LLM provider – Amazon Bedrock, OpenAI, Anthropic, local models. Switch providers without changing your code.

- Simple Multi-Agent Primitives: Primitives for handoffs, swarms, and graph workflows, with built-in support for A2A.

- Best-in-class AWS integration: Native tools for AWS service interactions. Deploy easily into EKS, Lambda, EC2, and more. Native MCP tool integration.

What is an MCP Server?

An MCP (Model Context Protocol) server is a lightweight, modular service that exposes tools or functions through a standard interface so they can be used by AI agents, workflows, or external systems. The server in this project is built on the FastMCP framework, which lets developers quickly register Python functions as callable tools over stdio, HTTP, or other transports, enabling LLM-driven automation, tool use, and orchestration.
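To make the "standard interface" concrete, here is a minimal sketch of the JSON-RPC 2.0 messages an MCP client and server exchange for tool discovery (`tools/list`) and tool invocation (`tools/call`). It uses only the Python standard library and a toy in-process dispatcher; frameworks such as FastMCP handle this framing for you. The `search_arxiv` stub, its `topic` and `max_results` arguments, and the returned IDs are placeholders, not the project's actual implementation.

```python
import json

def search_arxiv(topic: str, max_results: int = 2) -> list[str]:
    """Stub tool: returns placeholder paper IDs instead of querying arXiv."""
    return [f"placeholder-id-{i}" for i in range(1, max_results + 1)]

# Hypothetical tool registry: name -> (description, callable).
TOOLS = {"search_arxiv": ("Search arXiv for papers on a topic.", search_arxiv)}

def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request the way an MCP server does for tools."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        # Real servers also publish an inputSchema per tool; omitted for brevity.
        result = {"tools": [{"name": n, "description": d} for n, (d, _) in TOOLS.items()]}
    elif method == "tools/call":
        _, func = TOOLS[params["name"]]
        output = func(**params.get("arguments", {}))
        # Tool results are returned as a list of content items.
        result = {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}

list_response = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call_response = handle({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "search_arxiv", "arguments": {"topic": "AI", "max_results": 2}},
})
print(json.dumps(call_response, indent=2))
```

Over the stdio transport, these same messages travel through the server subprocess's standard input and output.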

NOTE: To learn more about developing an MCP server from scratch, see https://aiinfrahub.com/about-us/building-an-mcp-server-using-fastmcp-and-arxiv/

Project Goal

The goal of this project is to:

- Let a Strands agent search for, list, and summarize research papers

- Let the agent use the model's reasoning to choose and call the appropriate tool

- Let the agent take each tool's output (the context) and feed it back to the LLM to build the response

- Demonstrate how the LLM calls the Researcher tools:

  - search_arxiv

  - get_paper_info

This is useful in research automation, literature reviews, or building academic copilots.
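The goals above amount to a loop: the model decides whether a tool is needed, the agent runs it, and the tool output is fed back as context until the model produces a final answer. The sketch below illustrates that loop with a scripted stand-in for the model and stub tools. `fake_model`, the stub return values, and the message format are all hypothetical; Strands implements its own version of this loop internally.

```python
from typing import Callable

# Stub tools standing in for the Researcher MCP server (placeholder data).
def search_arxiv(topic: str) -> list[str]:
    return ["placeholder-id-1", "placeholder-id-2"]

def get_paper_info(paper_id: str) -> dict:
    return {"id": paper_id, "title": "Placeholder title"}

TOOLS: dict[str, Callable] = {
    "search_arxiv": search_arxiv,
    "get_paper_info": get_paper_info,
}

def fake_model(messages: list[dict]) -> dict:
    """Scripted stand-in for an LLM: request a tool once, then answer from its output."""
    last = messages[-1]
    if last["role"] == "user":
        return {"tool": "search_arxiv", "arguments": {"topic": last["content"]}}
    return {"answer": f"Found papers: {', '.join(last['content'])}"}

def run_agent(query: str, model=fake_model, max_steps: int = 5) -> str:
    """Loop until the model answers: decide, call the tool, feed the result back."""
    messages = [{"role": "user", "content": query}]
    for _ in range(max_steps):
        decision = model(messages)
        if "answer" in decision:
            return decision["answer"]
        result = TOOLS[decision["tool"]](**decision["arguments"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("model did not produce an answer")

answer = run_agent("Artificial Intelligence")
print(answer)
```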

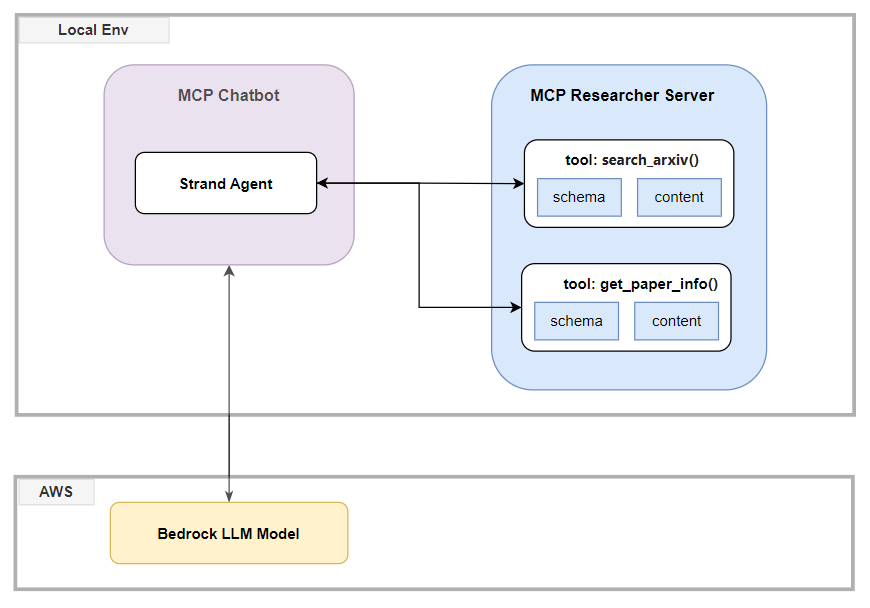

Architecture

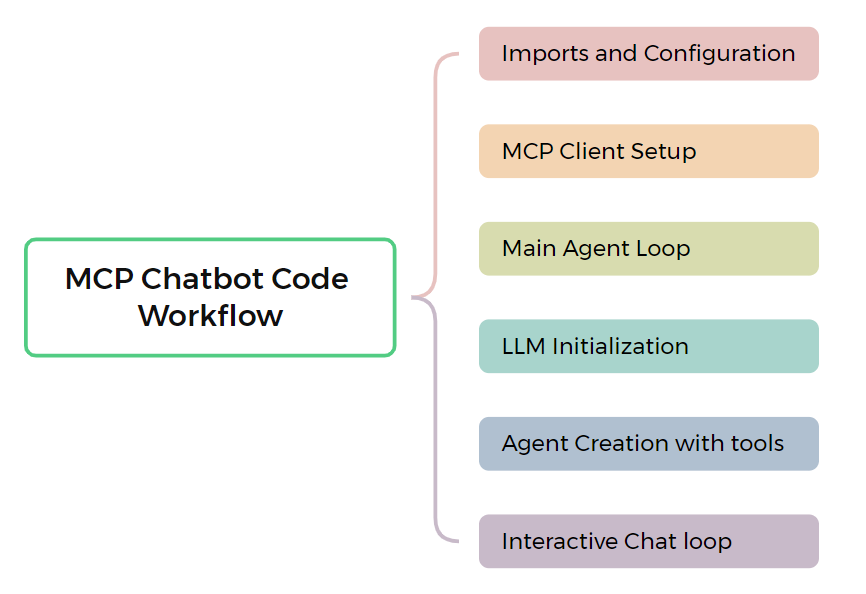

MCP Chatbot Workflow

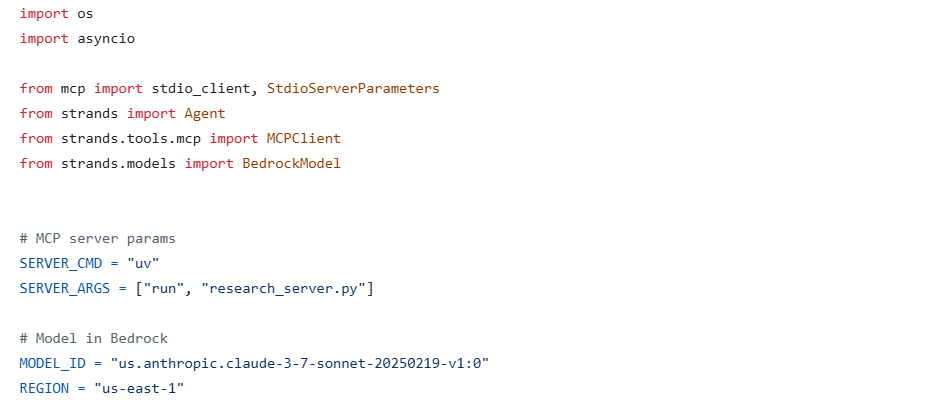

Imports and Configuration

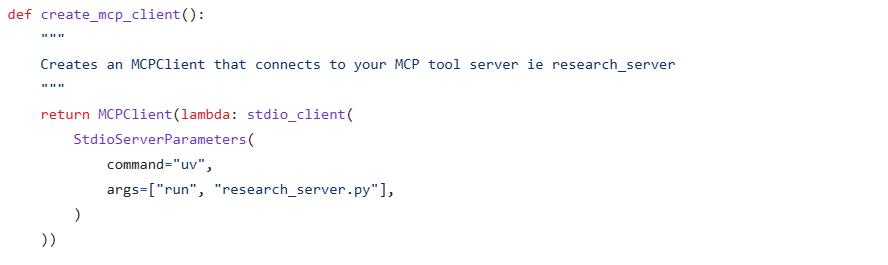

MCP Client Setup

This wraps your FastMCP server (research_server.py) as a subprocess launched via uv, using the stdio transport. It returns an MCPClient instance that can:

- Discover tools,

- Execute them when the agent calls.
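A minimal sketch of such a client factory is shown below, assuming the `strands-agents` and `mcp` packages are installed and that the server is started with `uv run research_server.py`. The exact command line and the `create_mcp_client` name are assumptions, not taken from the project.

```python
# Assumption: the server is launched with `uv run research_server.py`.
SERVER_COMMAND = "uv"
SERVER_ARGS = ["run", "research_server.py"]

def create_mcp_client():
    """Return an MCPClient that runs the FastMCP server as a stdio subprocess."""
    # Imported lazily so this module loads even where the SDKs are not installed.
    from mcp import StdioServerParameters, stdio_client
    from strands.tools.mcp import MCPClient

    params = StdioServerParameters(command=SERVER_COMMAND, args=SERVER_ARGS)
    # MCPClient takes a factory that opens the transport; the connection is
    # established when the client is entered as a context manager.
    return MCPClient(lambda: stdio_client(params))
```

The client is then used as `with create_mcp_client() as client: ...`, and tools should only be listed or called inside that block.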

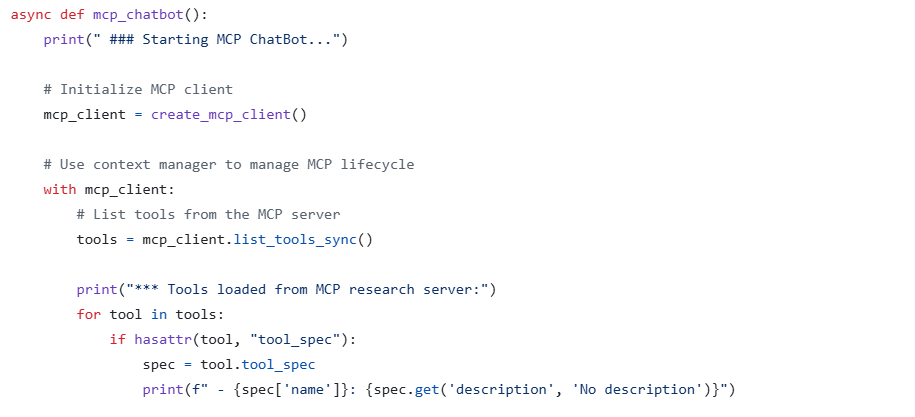

Researcher server tool discovery

This connects to the server and discovers the available @mcp.tool() functions exposed by research_server.py.
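Discovery can be sketched as follows, assuming an already-open Strands `MCPClient` whose `list_tools_sync()` method returns tool objects with a `tool_name` attribute. The stand-in client is only there so the sketch runs without a live server; the helper names are hypothetical.

```python
from types import SimpleNamespace

def discover_tools(open_client):
    """List the tools exposed by the server; the client session must already be open."""
    return open_client.list_tools_sync()

def tool_names(tools) -> list[str]:
    """Collect readable names, falling back to repr() for unknown tool objects."""
    return [getattr(tool, "tool_name", repr(tool)) for tool in tools]

# Stand-in client mimicking the two Researcher tools (no server required).
demo_client = SimpleNamespace(
    list_tools_sync=lambda: [
        SimpleNamespace(tool_name="search_arxiv"),
        SimpleNamespace(tool_name="get_paper_info"),
    ]
)
names = tool_names(discover_tools(demo_client))
print("Discovered tools:", names)
```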

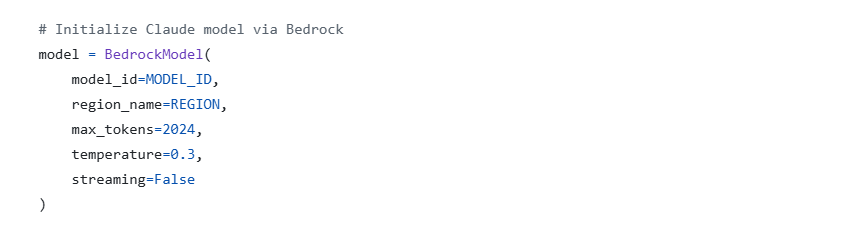

LLM Initialization

This sets up the Amazon Bedrock LLM backend. Parameters such as temperature and max_tokens can be tuned here.
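A sketch of the model setup, assuming the Strands `BedrockModel` class and valid AWS credentials. The model ID, region, and parameter values below are example settings, not the ones used in the project; pick a model that is enabled in your own account.

```python
# Example settings only (assumptions): choose a model ID and region
# that are enabled for your AWS account.
MODEL_CONFIG = {
    "model_id": "us.anthropic.claude-3-7-sonnet-20250219-v1:0",
    "region_name": "us-east-1",
    "temperature": 0.3,   # lower values give more deterministic answers
    "max_tokens": 1024,   # upper bound on the length of each response
}

def create_model(config: dict = MODEL_CONFIG):
    """Build the Bedrock-backed model used by the agent."""
    # Imported lazily so this module loads even where the SDK is not installed.
    from strands.models import BedrockModel

    return BedrockModel(**config)
```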

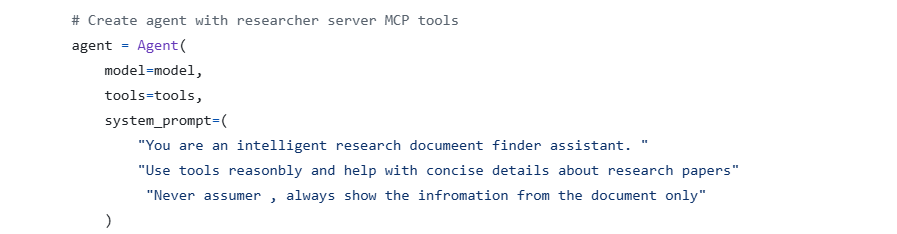

Agent creation with tools

The agent is now:

- LLM-powered

- Tool-augmented (via MCP)

- Context-aware (with a focused system prompt).

Note: The prompt enforces tool usage and citation discipline, so the agent responds only on the basis of real documents rather than assumptions.
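Agent creation can be sketched like this, assuming the Strands `Agent` class. The system prompt text is a hypothetical example of the "tool usage and citation discipline" described above; the project's actual wording may differ.

```python
# Hypothetical system prompt; the project's actual wording may differ.
SYSTEM_PROMPT = (
    "You are a research assistant. Always use the available tools to find "
    "papers, cite the arXiv paper ID for every claim, and say so when the "
    "tools return nothing instead of guessing."
)

def create_agent(model, tools, system_prompt: str = SYSTEM_PROMPT):
    """Combine the LLM, the MCP tools, and the system prompt into one agent."""
    # Imported lazily so this module loads even where the SDK is not installed.
    from strands import Agent

    return Agent(model=model, tools=tools, system_prompt=system_prompt)
```

The `tools` argument is the list obtained from tool discovery, and the agent must be used while the MCP client session is still open.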

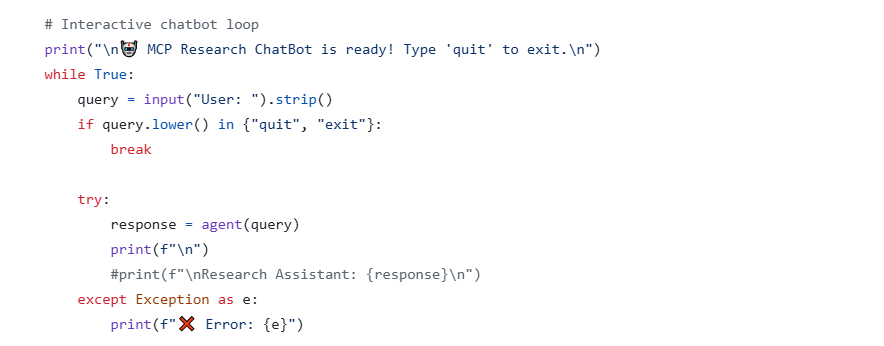

Interactive chat loop

A CLI-style interface for easy testing. It sends user queries to the agent, which:

- Parses intent,

- Selects tools (from the MCP server),

- Uses the LLM to formulate a response.
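A chat loop along these lines can be sketched with the standard library alone. The `chat_loop` name, the exit commands, and the injectable `read`/`write` functions are illustrative choices; the agent is treated as any callable that takes a query and returns a response, and the demo at the end uses a stub in its place.

```python
from typing import Callable

EXIT_COMMANDS = {"exit", "quit"}

def chat_loop(agent: Callable[[str], object],
              read: Callable[[str], str] = input,
              write: Callable[[str], None] = print) -> int:
    """Send each user query to the agent until the user types exit or quit.

    Returns the number of queries answered.
    """
    answered = 0
    while True:
        try:
            query = read("You: ").strip()
        except (EOFError, KeyboardInterrupt):
            break  # Ctrl-D / Ctrl-C ends the session cleanly
        if query.lower() in EXIT_COMMANDS:
            break
        if not query:
            continue  # ignore empty input
        write(f"Agent: {agent(query)}")
        answered += 1
    return answered

# Scripted demo with a stub agent, so the sketch runs without a model.
scripted = iter(["List two papers on AI", "", "quit"])
transcript: list[str] = []
count = chat_loop(lambda q: f"(stub reply to: {q})",
                  read=lambda _prompt: next(scripted),
                  write=transcript.append)
print(transcript)
```

With a real Strands agent, run the loop inside the MCP client's `with` block so the tools stay reachable. Strands agents print their streamed response by default, so you may prefer to drop the `write` call or disable the default callback handler to avoid duplicate output.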

Running the Agent – mcp_chatbot

git clone https://github.com/juggarnautss/Strand_Agent_MCP_Server.git

cd Strand_Agent_MCP_Server

uv init

uv pip install -r requirements.txt

python mcp_chatbot.py

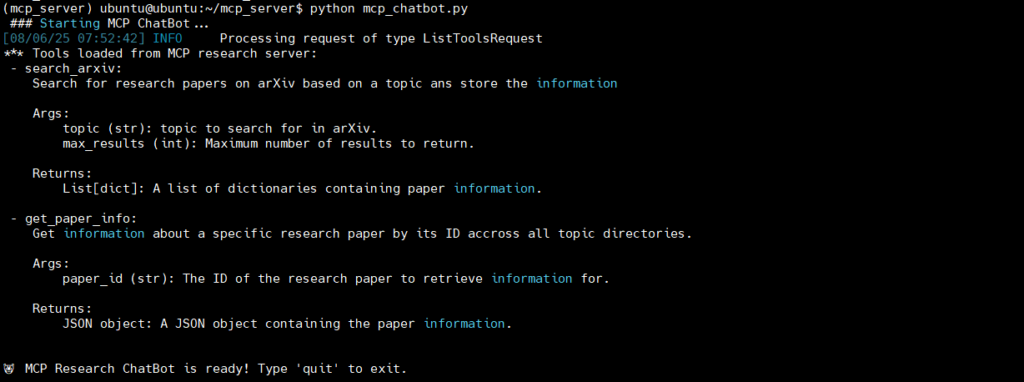

CLI screen of chatbot:

The MCP server's tools are listed as part of the chatbot's initialization.

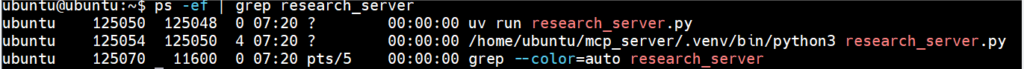

Researcher server:

Testing the Agent – mcp_chatbot

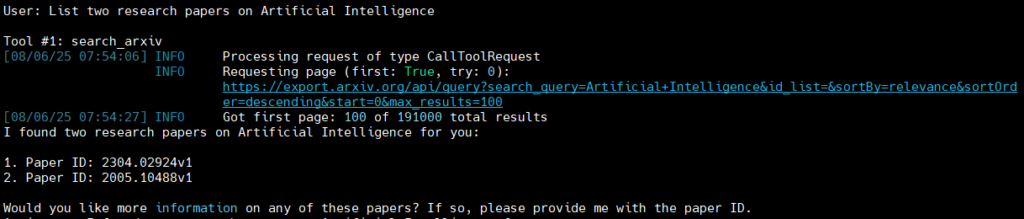

User: List two research papers on Artificial Intelligence

The Bedrock-backed LLM reasons about the request and selects the MCP tool search_arxiv() to fetch paper IDs. This is model-driven orchestration in practice: Strands relies on the model's reasoning to plan, orchestrate tasks, and reflect on goals.

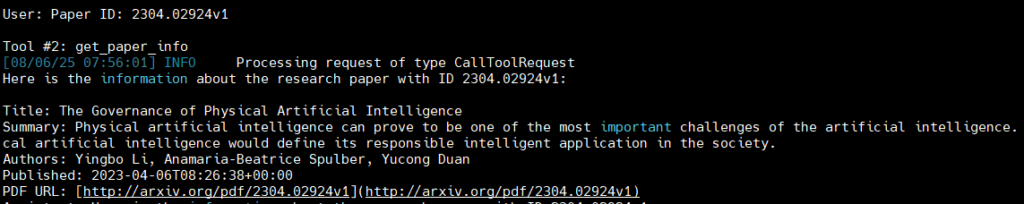

User: Paper ID: 2304.02924v1

The LLM now automatically selects the get_paper_info() tool to retrieve the paper's details.

Conclusion

In this blog, we explored how to build an intelligent, tool-augmented research assistant using AWS Strands Agents and the Model Context Protocol (MCP).

By connecting a Bedrock model as the backend of an agent and equipping it with a custom MCP server, we enabled the agent to reason and interact with real research tools in a structured, reliable way.

This setup demonstrates the core strength of Strands Agents, model-driven orchestration, which lets developers build modular, extensible AI systems that scale across use cases such as research, DevOps, and more.

References

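Building an MCP Server using FastMCP and arXiv: https://aiinfrahub.com/about-us/building-an-mcp-server-using-fastmcp-and-arxiv/

GitHub: Strand Agent MCP Server: https://github.com/juggarnautss/Strand_Agent_MCP_Server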
